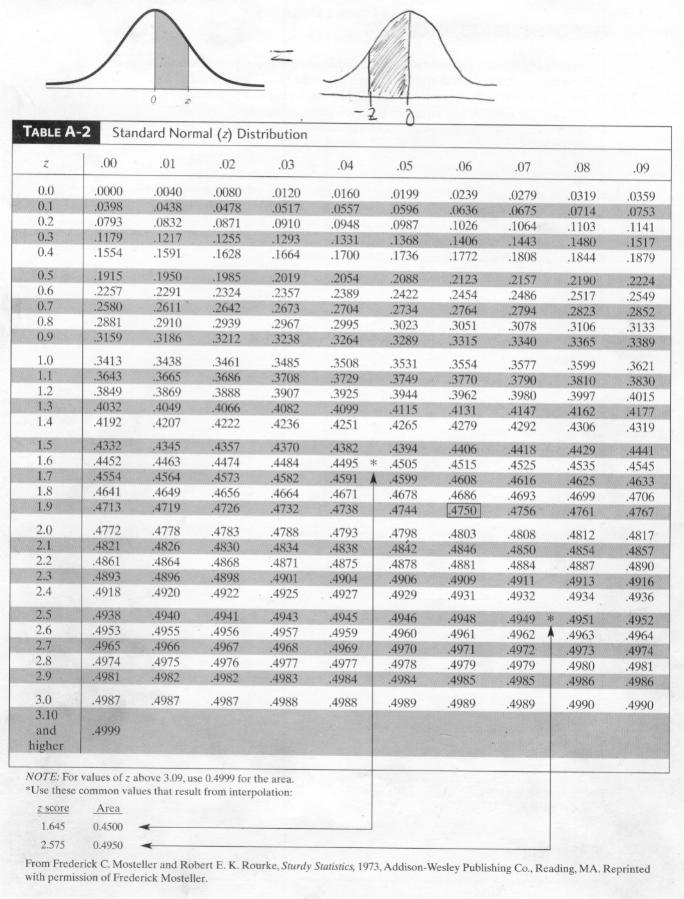

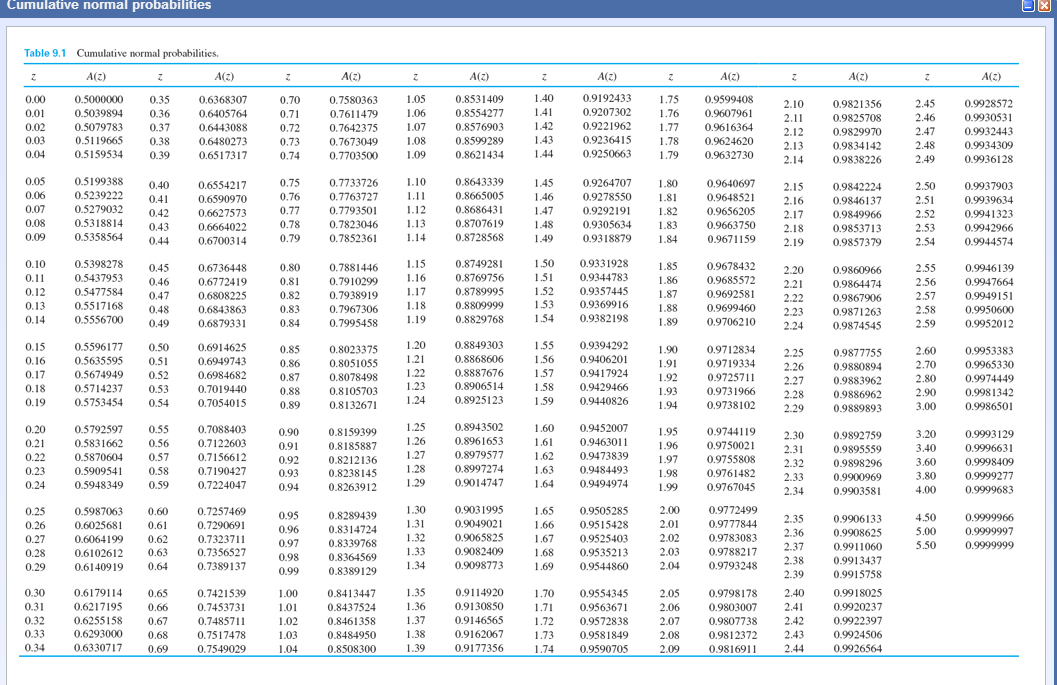

For any given Z-score we can compute the area under the curve to the left of that Z-score. Since the total area under the standard normal curve is 1, this lets us define the probabilities of specific observations more precisely. After standardization, the BMI=30 discussed on the previous page is shown lying 0.16667 units above the mean of 0 on the standard normal distribution on the right. When working with a standard normal distribution, we use "Z" rather than "X" to refer to the variable.
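The area-to-the-left calculation can be sketched with Python's standard library. This is a minimal illustration, not a prescribed method: the helper names `z_score`, `area_left_of`, and `area_within` are hypothetical, and the mean of 29 and standard deviation of 6 are assumed values, chosen only because they reproduce the Z-score of 0.16667 quoted for BMI=30.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1.
standard_normal = NormalDist(mu=0.0, sigma=1.0)


def z_score(x, mean, sd):
    """Standardize x: number of standard deviations x lies from the mean."""
    return (x - mean) / sd


def area_left_of(z):
    """Area under the standard normal curve to the left of z, P(Z < z)."""
    return standard_normal.cdf(z)


def area_within(k):
    """Area within k standard deviations of the mean, P(-k < Z < k)."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)


# ASSUMPTION: mean=29 and sd=6 are illustrative values, not taken from this page.
z = z_score(30, mean=29, sd=6)
p = area_left_of(z)
print(f"Z = {z:.5f}, area to the left = {p:.4f}")

for k in (1, 2, 3):
    print(f"within {k} SD of the mean: {area_within(k):.4f}")
```

Under these assumptions the area to the left of Z = 0.16667 is roughly 0.566, and the same cumulative distribution function gives the share of observations within 1, 2, and 3 standard deviations of the mean.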

Up to this point, "X" has denoted the variable of interest on its original scale (e.g., X=BMI, X=height, X=weight). The standard normal distribution is a normal distribution with a mean of zero and a standard deviation of 1. It is centered at zero, and the degree to which a given measurement deviates from the mean is measured in standard deviations. For the standard normal distribution, about 68% of the observations lie within 1 standard deviation of the mean, 95% lie within 2 standard deviations of the mean, and 99.7% lie within 3 standard deviations of the mean.

The least squares solution is computed using the singular value decomposition of the design matrix \(X\).

It is possible to constrain all the coefficients to be non-negative, which may be useful when they represent some physical or naturally non-negative quantities (e.g., frequency counts or prices of goods). `LinearRegression` accepts a boolean `positive` parameter: when set to `True`, Non-Negative Least Squares is applied.

When features are correlated and the columns of the design matrix \(X\) have an approximately linear dependence, the design matrix becomes close to singular and, as a result, the least-squares estimate becomes highly sensitive to random errors in the observed target, producing a large variance. This situation of multicollinearity can arise, for example, when data are collected without an experimental design.
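The sensitivity to near-collinear features can be illustrated with a small pure-Python sketch. It is only an illustration under stated assumptions: the data are made up, the function names are hypothetical, and it solves the 2x2 normal equations directly for brevity rather than using the singular value decomposition mentioned above.

```python
def ols_two_features(x1, x2, y):
    """Least-squares fit of y ~ b1*x1 + b2*x2 (no intercept).

    Solves the 2x2 normal equations with Cramer's rule.
    """
    s11 = sum(a * a for a in x1)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s22 = sum(b * b for b in x2)
    t1 = sum(a * c for a, c in zip(x1, y))
    t2 = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12  # close to zero when x1 and x2 are almost collinear
    b1 = (t1 * s22 - t2 * s12) / det
    b2 = (t2 * s11 - t1 * s12) / det
    return b1, b2


# Made-up data, for illustration only.
x1 = [1.0, 2.0, 3.0, 4.0]
x2_distinct = [1.0, -1.0, 1.0, -1.0]     # not close to a multiple of x1
x2_collinear = [1.01, 1.99, 3.01, 3.99]  # almost identical to x1
noise = [0.05, -0.05, 0.05, -0.05]       # small error added to the target


def fit_clean_and_noisy(x2):
    """Fit once on a noise-free target and once on the perturbed target."""
    # The true coefficients are (1, 1), so the clean target is x1 + x2.
    y_clean = [a + b for a, b in zip(x1, x2)]
    y_noisy = [v + e for v, e in zip(y_clean, noise)]
    return ols_two_features(x1, x2, y_clean), ols_two_features(x1, x2, y_noisy)


distinct_clean, distinct_noisy = fit_clean_and_noisy(x2_distinct)
collinear_clean, collinear_noisy = fit_clean_and_noisy(x2_collinear)

print("distinct features :", distinct_clean, "->", distinct_noisy)
print("collinear features:", collinear_clean, "->", collinear_noisy)
```

With the same small noise of size 0.05, the fit on the distinct features moves from (1, 1) to about (1, 1.05), while the fit on the nearly collinear features jumps from (1, 1) to about (-4, 6).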

The coefficient estimates for Ordinary Least Squares rely on the independence of the features.

`>>> from sklearn import linear_model`

`>>> reg = linear_model.`
